Jun 3, 2016

DrivingTheFuture Musk's statement in the RECODE video all but settled it. He said it's obvious what they'll be announcing later this year. @flamingoezz already posted something similar to this, but it bears repeating, and I didn't see anyone sharing this story from Teslarati: Tesla Autopilot 2.0 hardware possibly spotted on Model S test mule. The images clearly show new autopilot hardware. And if they're testing this ALREADY, it should really be functional by launch time. Free or not, activated or not, the capability will be there.

Jun 3, 2016

FirstSea It's obvious he is going to reveal AP V2; whether or not it includes full autonomy remains to be seen.

Jun 3, 2016

S'toon That's because it was posted in the Model S forum, some time ago, and is a few pages in on the timeline.

Autopilot 2.0 test hardware sighting?

Jun 3, 2016

Johan "Great" alternatives to answer. Not begging the question at all.

Jun 3, 2016

ecarfan This is already being discussed here: Nobody talking about the Full Autonomy hint from Elon at Code Conference?!

Jun 3, 2016

favo Kudos for correct usage of the phrase. Very rare these days.

Jun 3, 2016

DrivingTheFuture Yep, and I gave that OP credit, but he was referring to a different comment made by Elon... granted, along the same lines.

Jun 3, 2016

DrivingTheFuture Hmmm... not sure how the answers "No Way" and "Most Definitely" fit the definition of "begging the question" .......where premises include the claim that the conclusion is true...

Some light reading: Fallacy: Begging the Question

Jun 3, 2016

DrivingTheFuture That's interesting, but I was actually referring to the photos that only just got posted on May 26th, and show different hardware. But that's neat to see they are testing numerous systems / solutions OR that they've simply refined what they were using back in March.

Jun 3, 2016

ohmman It's clear that the "obvious" thing they'll be announcing is a one year delay.

Jun 3, 2016

1208 I thought it was zebra striped seat covers.

Jun 3, 2016

jkk_ First option sounds positive, third option negative. Some of us don't need to drive our cars, we get to drive our cars.

Jun 3, 2016

Johan Yeah, that was my point - the alternatives the OP gave had very emotional connotations and hence, whether intended or not (perhaps a subconscious phenomenon), the question and its multiple choice answers were leading and implying. Perhaps not exactly the same as begging the question, but close enough.

A better label would be this:

Your logical fallacy is loaded question

If one were actually interested in people's opinion on the matter, without signaling one's own bias, the answer choices would be: yes, maybe and no. Not "yes of course" and "no way, drive the sucker yourself".

Jun 4, 2016

davidc18 I think the obvious thing is that it will have the autopilot hardware installed (activated for additional $$$). I expect it to still be version 1.0 and still be in beta but have additional sensors for better blind spot detection.

Jun 4, 2016

deonb I think the obvious thing is: "It does what the Model S does".

Not: "It uses some new and untested, unannounced, system that may or may not be ready by then, if ever, and will have a who knows what regulatory backlash."

People have a tendency to always over-read Elon's comments. When Elon says something, he means it directly - he doesn't speak in code.

Jun 4, 2016

DrivingTheFuture Ok, I see your points now. My intent was not to create biased responses but to make the options more interesting and fun than just "yes" or "no".

Jun 4, 2016

DrivingTheFuture Couldn't agree more. I love driving my car in certain scenarios, and I love that Elon is stating that he never intends to take that freedom away from people.

Jun 4, 2016

DrivingTheFuture They've already announced this... why would they hold a second event to announce something they already announced??? Elon was referring to WHAT they will announce (something new) later this year when he made the "obvious" statement.

Jun 4, 2016

Siciliano I think both the 3 and the S will have "near" full autonomy, it's simply a matter of the software being sufficiently baked within 2 years - hardware is the easy end of it.

I also highly doubt regulations will be in place by then, so they'll simply do what they've done with autopilot 1.0 - feature a disclaimer that you MUST be alert at all times with your hands on the steering wheel. End of story. That pretty effectively covers the liability end of it. Like I've said before, there currently is no regulation that states you may let your car drive itself down the freeway.... are people still doing it anyway? Absolutely.

It's funny that so many people think it's not even possible, yet as long as a few YEARS ago Google was already successfully "running" its own fleet of fully autonomous cars. They even had no problem with dirt roads, intersections, road signs, etc... Do some research. And I'd imagine they're far closer than Tesla is... aka months away, not years.

Jun 4, 2016

DrivingTheFuture I think Tesla has leapfrogged ahead of Google. Because of the shift to machine learning, the company with the most data wins... and Tesla is getting A LOT more data than Google... and accumulating more and more each day. Google's only shot now is to license their tech to car companies that already have sensors on the road in their vehicles, and Elon mentioned this is what he thinks they'll do...

Jun 4, 2016

Siciliano Yes, this *may* be true.. but from what I've researched about Google, they're already nearly there. I'd say 90% baked. But yes, their main issue is they never took the leap into production like Tesla has.

I think they're more than happy with possessing and licensing the software end of it to ride sharing companies like lyft and uber, but it will take quite a while for regulations to allow these cars to drive themselves around without a driver inside.

I'd be very curious to know if they have any plans to license directly to car manufacturers, along with their hardware... that would make more sense since the driver would need to be present and alert, etc. I could see it now -

"The all-new 2018 fully electric Chevy Ohm, featuring Android Auto with voice activated control and Google Autopilot."

Jun 4, 2016

Alketi I'd bet anything that's exactly what Google will do. They're going to create a software platform, let's call it "Android Autopilot" and make it available to any car manufacturer. And it will either come with, or require, a specific set of sensors.

For Tesla, I think this year's announcement will be a much upgraded highway autopilot, and that recent S/X were already shipping with the hardware, and that the 3 will get the same features.

The hardware capable of full autonomy should arrive in 2018, per Musk's prediction and coinciding with the delivery of Mobileye's next-generation chip.

Jun 4, 2016

ecarfan That is beyond obvious: it's boring. No need to...

Exactly. Elon won't hold an "event" unless there is something important to publicize. A significant step towards full autonomy -- but not full autonomy -- would be worth having an event for.

Yes. It will likely be a major improvement in AP -- new hardware with software coming "soon" -- that will extend AP to streets with cross traffic, but as @Siciliano went on to say, the driver will still be fully responsible for the operation of the vehicle.

In addition, I think it will be announced that AP will follow the navigation route shown by the car once the driver enters a destination. That

By the end of this year, it will be just over 2 years since the first version of AP was announced in Sep. 2014. It's clearly time for another big step forward.

Then in another 2 years, full autonomous driving capability if allowed by law.

I think AP will continue to be labeled as "beta" until the law covers it.

Jun 4, 2016

electracity I doubt regulations/liability allow level 3 in most places. So I think the announcement is the S/X getting new AP and a path to level 3.

The new AP may allow level 3 by map coordinates. I doubt this announcement is the Model 3 reveal 2, but it does show the AP all cars will get.

The "obvious thing" to me is a new AP/major firmware release including a new UI; this keeps S/X sales good going into the release of the Model 3.

Jun 4, 2016

ecarfan Based on the NHTSA definition of Level 3, laws in several US states allow it, including California, and more are sure to follow. Also, many states have no laws restricting autonomous driving of any type.

Level 3 means a licensed driver is present in the vehicle and ready to take control at any time.

U.S. Department of Transportation Releases Policy on Automated Vehicle Development | National Highway Traffic Safety Administration (NHTSA)

Limited Self-Driving Automation (Level 3): Vehicles at this level of automation enable the driver to cede full control of all safety-critical functions under certain traffic or environmental conditions and in those conditions to rely heavily on the vehicle to monitor for changes in those conditions requiring transition back to driver control. The driver is expected to be available for occasional control, but with sufficiently comfortable transition time. The Google car is an example of limited self-driving automation.

Jun 4, 2016

gregincal The chance of full autonomy in this timeframe is just about zero. However, there are a large number of hugely significant steps between where autopilot is now and full autonomy. A big one would be the "crash proof" technology like BMW demonstrated (no matter what the driver does, the car doesn't allow itself to be crashed).

Jun 4, 2016

JeffK Many states simply don't have laws against it, so therefore it's perfectly legal as long as you're still behind the wheel.

Jun 4, 2016

jkk_ Any idea how it responds to bad vs worse situations? For example, it might be better to steer off road in order to avoid a moose - will it allow it?

Jun 4, 2016

Johan In such an instance yes, I think it will prioritize avoiding the collision even if it means venturing off the road.

The really interesting situation is of course if the system knows in advance it is going to collide with either A or B and is in the position where a choice is possible to make (just keep going is then also a choice), and A and B are both identified with high probability as pedestrians.

Jun 4, 2016

James Anders My guess is the 3 will have the necessary hardware but the software will be lagging. Musk said he expected at least 3 years for regulatory approvals etc. Hardware in place, software functionality will improve until full autonomy in about 4-5 years.

Jun 4, 2016

Johan I'm going to go out on a limb and predict that we shouldn't expect level 4 autonomous from Tesla until we see them fix basic nav and media software.

Jun 4, 2016

Chewy3 What is the likelihood that Tesla builds the Model 3 with Mobileye's next gen chip and sensors BEFORE they master autonomous driving?

Most people agree it's very unlikely they will have level 4 by the time the 3 rolls out, but the hardware that's expected to be able to handle it is already announced. Smart engineers will build with the best hardware they can, and ramp up the software to match it as time goes on.

Am I wrong in assuming they already know which sensors they need and it's more of a journey to optimize the software in the car to detect & determine correct actions?

Jun 4, 2016

melindav That, and from my understanding Google is banking on their 3D mapping. It would be fantastic if you use the future Google product in the Bay Area. Not so much in Nebraska. But Tesla's system instead is going toward the computer being able to control the car even if it's never seen that specific road before.

Jun 4, 2016

cronosx I think Google and Tesla are approaching AP from opposite sides.

One is "think in the moment" (Tesla), the other is "I already know everything, so I know what to do". Of course these are the extremes of the concept, but the point is: if we draw a line with "think in the moment" on the left side and "use the database" on the right side, Tesla is on the left side moving right, and Google is on the right side moving left.

Which is the better way for each of them, since Tesla gets a system that keeps getting better but is already usable, while Google has no need to rush and has a huge platform on which to store and analyze the data.

In the end, Google's is the better approach if you want fully autonomous driving, but for now Tesla simply wants to get better and better until the Google approach is ready and well solved, so that the two can be merged.

So, about the topic: I think they know what HW is needed by now, and they are reasonably sure of it, but I don't think it can be definitive. I mean, when the law is ready, maybe they will want an additional CPU/sensor/something, maybe standard for everyone, as a safeguard to let the car drive itself, and of course they can't know that now because the law is not set. So, for an AP which requires you to stay alert and take responsibility, surely they know what to do; for the rest..

And of course we are talking about HW; the software could take another couple of years.. who cares? The most important thing is that we have the final HW and don't get to the point where it's "ah.. we are sorry, but you can't have AP v2.3 since you don't have that sensor; if you want it, it's $4000". That would make me very, very sad.

Jun 4, 2016

DrivingTheFuture I was thinking the other day of the only drawback to this capability and autopilot... what if you are being chased by ill-intentioned criminals or there's an emergency situation, and in either case the only escape route is by driving through a fence or barricade... and instead of plowing through said obstacle, the emergency braking safety feature keeps slamming on the brakes every time you try to drive forward haha. I hope they install an emergency off switch for those rare instances...

Jun 4, 2016

JeffK It supposedly disengages any automatic braking when you hit the gas.

Jun 4, 2016

Alketi The Mobileye EyeQ4 is expected to be released in volume production in 2018.

Mobileye said it secured the first design win for EyeQ4 from a European car manufacturer, with production to start in early 2018. The EyeQ4 is expected to be available in volume in 2018.

So, unfortunately, this won't be available for start-of-production for the Model 3.

I think, depending on cost of course, that Tesla would ship the hardware earlier than the software -- but I still wouldn't expect to see the hardware for full-autonomy until the 2018 timeframe.

But, I do think we'll get our peek at Autopilot 2.0 at the end of this year and that it'll be shipping in the S/X before then.

Jun 4, 2016

Johan Braking is seen as a defensive act but accelerating (hitting the gas) as an offensive manoeuvre. It's then common for humans, in this example the autopilot engineers, to program things so that the offensive act overrides the defensive one. This is probably a form of cognitive slippage from the engineers. I'm quite certain that real world data will show that there's no inherent reason to allow the human to override the autonomous safety system - it seems to me that if/when the artificial system outperforms the human consistently it would be dumb to let the human override it?

Jun 4, 2016

FirstSea So most states can't prohibit lv 3 autonomy, but what about insurance companies, could they try to use it to raise the premium on Tesla vehicles?

Jun 4, 2016

EinSV If it reduces the number and severity of accidents, which it should, insurance rates should be the same or lower.

Jun 4, 2016

cronosx And this is a big problem.. it's understandable, but then, if you hit the wrong pedal, you hit the gas instead of the brake, thus disabling the emergency brake, and of course, while you drive you are constantly pushing the gas, thus making the emergency brake useless.

I don't think it's so easy to fix this issue, but then, it's not our problem to solve now, is it?

Jun 4, 2016

ecarfan That has already been in place since the debut of AP; push on the accelerator or the brake pedal or disengage using the cruise control stalk.

It is easy to cancel AP.

Jun 4, 2016

JeffK See, that's what I'm saying too, because the autonomous braking disables if you hit the gas AND if you hit the brake.

Jun 4, 2016

ohmman I don't think it will venture off-road in most cases, if not all. It's too unpredictable what the off-road terrain looks like. It could be a cliff, or a marsh. I would imagine it's much more likely to avoid the accident while staying on the roadway.

Your second point is the favorite of AI philosophers when thinking ahead to fully autonomous vehicles. If a car can choose between veering off the bridge and killing the occupant(s), or having a busload of school children veer off the bridge, how should it prioritize? Rhetorical, let's not get into that one...

Jun 4, 2016

Johan Uh-huh, you just got yourself into it by discussing it. Besides, this is really interesting stuff to think about, and not specifically because it has to do with Tesla or even electric transport. No, it's because it is an example of a problem we will soon have to face in reality, one that puts the spotlight on the fact that we will experience more and more instances where a non-general (i.e. task-specific) AI system gets better than we are at whatever it is we wanted it to do (in this case, avoid accidents while driving).

Jun 4, 2016

Alketi If a collision is imminent, without any possibility of avoiding it, the car is not apt to make complex moral decisions. It would most likely be programmed to just apply the brakes.

Autopilot doesn't guarantee zero accidents, and all things being equal, reducing speed is a fairly reliable way to reduce damage given a no-win scenario.

Jun 4, 2016

Johan I agree that in the utilitarian way hitting the brakes to reduce total damage makes sense, but it's not a default choice; it is an active choice to do this (for example, hit the brakes and hope to cause the least total damage) instead of, for example, swerving away (to avoid hitting a probable child) but in the process likely hitting the car next to you. So the system must either be programmed so that it can assign values to outcomes (how desirable/undesirable is the outcome?) or it must learn over time how to assign value (it will learn through our judging its choices and adapt to how we want it to act).

Jun 4, 2016

FloridaJohn This topic is really getting off thread, but the real question is how often do humans make the right choice? A self-driving AI only has to be better than that. It does not have to make the "perfect" decision.

When we get to that point, I really don't think it will be a problem. The car will have a much better reaction time than a human (and could also have much more information). It will easily be able to solve the problem in a way humans can't even comprehend.

Jun 4, 2016

zenmaster The problem is not avoiding things. It is negotiating less trivial, yet common scenarios. When it gets confused it will stop and block the flow of following traffic. And how can self-driving AI understand the simple and common scenario of a police officer providing necessary direction? There might be a new market for "summon assist" whereby a call is made for an authorized driver to rescue stranded AP cars.

Jun 4, 2016

JeffK Google's car can read the hand signals of cyclists, so my guess is reading hand signals of police is not that far off...

Jun 4, 2016

ohmman So, I guess someone flipping the bird will be crystal clear.

Jun 4, 2016

zenmaster Nope, police do not use anything remotely similar to standard gestures and incorporate a whistle sometimes. They often need to signal movement around obstacles with a particular route to follow. Neither this gestured route nor its context would be apparent to the AP. And of course police often need to provide some verbal directions at incident scenes. Cop to AP car "go back the way you came" would work out great.

Jun 4, 2016

FloridaJohn Looks like you changed the original premise we were discussing. A vision system will either be able to interpret the signals from a police officer/construction worker, or there will be a small radio beacon set up to relay instructions to the car. And, once again, the car doesn't have to be perfect, just better than humans.

Jun 4, 2016

zenmaster Sure, you could always force police/construction/emergency workers to accommodate the limitations of the car where possible. Sort of defeats the point of "full autonomy" though. Also you'd have to precisely diagram the appropriate route through a temporarily obstructed intersection pretty quickly before an AP car might arrive. And of course, the situation changes completely as obstructions are moved. Or how about the simple case of a lane closure with a single lane handling two-way traffic? Everything can be made to work better if you assume laws will be made to require authorities to assist with the tech's inadequacies.

Jun 4, 2016

Garlan Garner Full Autonomy? I wonder if it's possible to get full autonomy for $35K.

Jun 4, 2016

ecarfan I am not aware of anyone seriously proposing that a base price Model 3 will have any version of AP. It will be an option.

Or maybe what Elon means by "the obvious thing" is simply Part 2 of the Model 3 reveal where much more detail is shown in regards to the performance capabilities of the car and the "like driving a spaceship" control interface.

It may be too soon for Tesla to show AP V2.

Jun 5, 2016

cronosx My point is.. it's stupid.

You have autonomous braking, but it's useful only in AP, so it's not a safety feature; it's only how the AP brakes while active.

We are talking about emergency braking, which can be needed (and surely in most cases is) when you are not in AP or when the AP doesn't work the way you expect.

In this case you are surely doing something like hitting the gas, and maybe you don't see something that the camera sees (like a pedestrian at your right corner or similar).

That's correct, in a talk they showed the car interpreting the hand gestures of the police.

But then.. they have a point saying that the police could use 'strange' signs that the car cannot comprehend, but we are talking about a future where the car can really, really go from A to B alone.

And the law is set.

But then, in this case I think it is safe to assume that there will be some sort of online police instruction or similar for cases like "it's obstructed, find another path".

Jun 5, 2016

electracity The current version of AP is likely most helpful for building maps. The next version will begin to build the dataset that leads to full autonomy. Tesla likely needs as many cars running AP 2.0 as soon as possible.

Once autonomy becomes a sure thing, cities may compete to install infrastructure to make solving various problems easier. For example, a radio beacon system could provide precise vehicle location for bad weather, and the police could have access to a map that would close lanes. Busing will have to be re-thought, and should improve. Car sharing may be less of a thing than expected, with small vehicle public transportation becoming more of a thing.

Jun 5, 2016

melindav You must be in a city with a much larger transportation budget. Portland's streets are so bad voters just approved a 10 cent per gallon gas tax to fund road repairs. They are at an emergency triage level and the city will not be doing anything beyond fixing the largest issues on the business streets.

Jun 5, 2016

electracity Just a city that pays bus drivers to often drive near empty buses 24/7.

Jun 5, 2016

melindav In most cities, transit is a private company, not a city department. And in those where it is a city department, it is separate from the transportation department.

Jun 5, 2016

electracity Doesn't matter who owns it. To eliminate bus drivers requires an all-weather infrastructure for autonomous vehicles.

Jun 5, 2016

melindav You have missed the point. Your comment implies that since the transit company has excess drivers, they could fire those employees, take the payroll savings, and put them toward city infrastructure that would further deplete their business. That is not happening.

Again, most transit companies are not part of the city's budget. They operate on their own funds and the only responsibility to the city's infrastructure they have is maintaining their own bus stops, light rail stations, park-and-rides, etc. Most around here are pretty lean and the only empty buses are those going back to the garage.

Which has nothing to do with the thread topic of the Model 3 being fully autonomous.

Jun 5, 2016

Raindog1 "we will do the obvious thing" and "steering will feel like a spaceship"

Since the "mousetronauts" don't fly the spaceship, and a recent article stated that Tesla wants to share the Autopilot data with the Department of Transportation... I think the obvious thing is AP will be capable of being fully autonomous on the MS, MX, M3.

I just hope they don't go to the extent of the "spaceship" steering.... I'm not ready to let go of a steering wheel!

Jun 5, 2016

electracity You are going to find that the future is a scary place.

Jun 5, 2016

zenmaster Just watched Shashua's 2016 CES presentation, where he specifically mentions level 5 as "fully autonomous", which of course follows SAE. Since this fact contradicts assertions made in this thread, there definitely is confusion about the term and what it implies. This confusion is helped along by hype.

![[?IMG]](https://qph.is.quoracdn.net/main-qimg-1a0f9494c2a60f60de12514d3a4f758e?convert_to_webp=true)

Jun 5, 2016

McFlurri As a Transit Operator myself, I have often thought about the advent of autonomous buses, and how that will affect my career.

I see myself in a safe and secure job for approximately the next 10 years. After that, I feel like I will still have a job, but in a different capacity, similar to a pilot. Planes can fly themselves today, but there are still pilots for those emergency situations. I would also fulfill more of a customer service role, assisting riders with disabilities and whatnot (not that I don't already do that, but I won't have to worry about many other things at the same time).

Also, I think there would still be an actual person in the seat so if anything does go wrong, we get blamed for the incident rather than the company (oh, the Operator should have prevented the collision... they should have intervened!)

As for the empty buses comment: from experience I can say that there are many times my bus is about 25% full and others when it's 100% full. It comes down to how the transit company sets the schedule. They should have the tools in place to accurately determine when the peak ridership times are, and adjust accordingly so as to not have empty buses. A 40' bus uses around 45-55 L/100 km. If the bus has even only 15 passengers on it, then the effective fuel economy is about 3.3 L/100 km per person... that is potentially taking 15 cars off the road. And since there is no ICE car with fuel economy that good, the environmental impact of public transportation is effectively waaay better.

Yes, there are times where I have fewer passengers, but there are also times where I have way more. Plus, we aren't even talking about congestion. A bus can hold more people in a smaller space, which frees up the roads for the rest of us!
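The per-person figure above can be checked with a few lines of Python. This is only a sketch of the arithmetic in the post: the function name `per_passenger_consumption` is made up for illustration, and the inputs are the 45-55 L/100 km range and the 15 passengers quoted above.

```python
def per_passenger_consumption(bus_l_per_100km: float, passengers: int) -> float:
    """Litres per 100 km attributable to each passenger on the bus."""
    if passengers <= 0:
        raise ValueError("need at least one passenger")
    return bus_l_per_100km / passengers


# Figures quoted in the post: a 40' bus at 45-55 L/100 km carrying 15 riders.
low = per_passenger_consumption(45, 15)   # best case
mid = per_passenger_consumption(50, 15)   # midpoint of the quoted range
high = per_passenger_consumption(55, 15)  # worst case

print(f"{low:.1f} to {high:.1f} L/100 km per person (midpoint {mid:.1f})")
# prints "3.0 to 3.7 L/100 km per person (midpoint 3.3)"
```

So the 3.3 L/100 km figure corresponds to the midpoint of the quoted range; with fewer riders the per-person number rises proportionally.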

Jun 5, 2016

JeffK That's cool, but the SAE is not NHTSA, and NHTSA doesn't define a "level 5".

U.S. Department of Transportation Releases Policy on Automated Vehicle Development | National Highway Traffic Safety Administration (NHTSA)

Yet, if the volume goes to 11 then anything is possible.

Jun 5, 2016

zenmaster But Tesla's (and others') car tech advancements follow the more practical approach of Mobileye, who is the industry-defining leader currently. I personally would not trust a "summon" delivery on anything less than Level 5. It's also irresponsible to make a statement claiming this would work reliably in 2 years.

Jun 5, 2016

JeffK NHTSA is a government agency; they would need to first define a level 5 driverless car and set regulations surrounding such a thing before something like that would be allowed to cross state lines etc. At least we'd hope haha

Jun 5, 2016

Discoducky What if autonomous cars were already operating for millions of miles today? Would two years for mass consumption seem irresponsible?

Jun 5, 2016

zenmaster "Fully autonomous" vs "autonomous" - the gap between the two is incredibly vast. Watch the presentation which describes the current state of the art on the subject. The biggest challenge right now, and one that is currently being worked out for the next gen systems, is "driving policy", where active context identification allows the car to time and plan movements around objects and among bad drivers.

Jun 5, 2016

ohmman Baidu's path to autonomy is different from that of Google and of Tesla. Andrew Ng has discussed how he feels the best way to train a good model is to give it a "known" path that it repeats. Learning the roadway and route becomes a very easy training task, so the model can "focus" on the isolated incidents (cyclists, pedestrians, etc.) To that end, Baidu is deploying driverless buses in China. I think it's a novel and valuable approach.

What would be great is if each of the many entities in the space could collaborate instead of keeping their data as proprietary. The machine learning field is a field that values openness and sharing, so it's possible this will occur. Tesla sharing their data with the DOT is a start.

Jun 5, 2016

omgwtfbyobbq My guess is they can pull off L4 on the highway and L3 in the city around the time the 3 launches. L4 on the highway would be super awesome IMO.

Jun 5, 2016

FlatSix911 Interesting NHTSA summary ... too bad they don't recognize Level 5 as the SAE does.

NHTSA defines vehicle automation as having five levels:

No-Automation (Level 0): The driver is in complete and sole control of the primary vehicle controls (brake, steering, throttle, and motive power) at all times.

Function-specific Automation (Level 1): Automation at this level involves one or more specific control functions. Examples include electronic stability control or pre-charged brakes, where the vehicle automatically assists with braking to enable the driver to regain control of the vehicle or stop faster than possible by acting alone.

Combined Function Automation (Level 2): This level involves automation of at least two primary control functions designed to work in unison to relieve the driver of control of those functions. An example of combined functions enabling a Level 2 system is adaptive cruise control in combination with lane centering.

Limited Self-Driving Automation (Level 3): Vehicles at this level of automation enable the driver to cede full control of all safety-critical functions under certain traffic or environmental conditions and in those conditions to rely heavily on the vehicle to monitor for changes in those conditions requiring transition back to driver control. The driver is expected to be available for occasional control, but with sufficiently comfortable transition time. The Google car is an example of limited self-driving automation.

Full Self-Driving Automation (Level 4): The vehicle is designed to perform all safety-critical driving functions and monitor roadway conditions for an entire trip. Such a design anticipates that the driver will provide destination or navigation input, but is not expected to be available for control at any time during the trip. This includes both occupied and unoccupied vehicles.
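For anyone who wants the five NHTSA levels listed above in a form that is easy to compare, here is a rough Python sketch. The enum member names and the helper `driver_must_be_available` are my own shorthand, not anything NHTSA publishes, and the helper only encodes what the quoted definitions say about the driver being available for control.

```python
from enum import IntEnum


class NhtsaLevel(IntEnum):
    """The five levels (0 through 4) from the NHTSA summary quoted above."""
    NO_AUTOMATION = 0
    FUNCTION_SPECIFIC = 1
    COMBINED_FUNCTION = 2
    LIMITED_SELF_DRIVING = 3
    FULL_SELF_DRIVING = 4


def driver_must_be_available(level: NhtsaLevel) -> bool:
    """True below Level 4; per the quoted text, only Level 4 does not
    expect the driver to be available for control during the trip."""
    return level < NhtsaLevel.FULL_SELF_DRIVING


for level in NhtsaLevel:
    print(level.value, level.name, driver_must_be_available(level))
```

Note there is no member for a "Level 5": that exists in the SAE scheme discussed earlier in the thread, not in this NHTSA list.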

Jun 5, 2016

1208 Presumably there are mules going around with full autonomy. Presumably they are X and S mules. Time to start a Full-autonomy mule leaked pictures thread...

Jun 5, 2016

JeffK Andrew Ng has been in the AI world for a long time but is missing the point of how MobilEye and others are doing things. MobilEye's approach more closely resembles the human brain, in that separate areas of the brain have different functions. MobilEye uses separate deep neural networks that have different areas of focus. A network that focuses on pedestrians or cyclists will never be used for path planning, so there's no need to give it a known path. Another network is used for path planning. Of course, the output of the pedestrian network might be used as an input to the path planning network, but there's no reduction of resources in doing both.

Andrew Ng's recent Twitter post about how he thought Tesla releasing autopilot was irresponsible caused me to lose some respect for the man.
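To make the "separate networks, one feeding the other" idea concrete, here is a rough sketch in Python. Nothing here is MobilEye's actual design or API: `pedestrian_network` and `path_planner` are hypothetical stand-ins (plain functions, not real neural networks), and the 1.8 m half lane width and 0.5 m shift are arbitrary illustration values. The only point is the data flow: the detector never sees the path, and the planner takes the detector's output as one of its inputs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    lateral_offset_m: float  # positive = right of lane center, negative = left
    distance_m: float        # distance ahead of the car


def pedestrian_network(frame: dict) -> List[Detection]:
    """Stand-in for a detection network: keeps only pedestrians and cyclists.

    A real network would work on pixels; this stub reads pre-labeled objects.
    """
    wanted = ("pedestrian", "cyclist")
    return [Detection(**obj) for obj in frame.get("objects", []) if obj["label"] in wanted]


def path_planner(detections: List[Detection], lane_half_width_m: float = 1.8) -> float:
    """Stand-in for a path planning network.

    Returns a lateral shift in meters, steering away from the nearest
    detection that is inside the lane, or 0.0 if the lane is clear.
    """
    in_lane = [d for d in detections if abs(d.lateral_offset_m) <= lane_half_width_m]
    if not in_lane:
        return 0.0
    nearest = min(in_lane, key=lambda d: d.distance_m)
    return -0.5 if nearest.lateral_offset_m >= 0 else 0.5


if __name__ == "__main__":
    frame = {"objects": [
        {"label": "car", "lateral_offset_m": 0.0, "distance_m": 40.0},
        {"label": "cyclist", "lateral_offset_m": 1.0, "distance_m": 20.0},
    ]}
    print(path_planner(pedestrian_network(frame)))
```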

Jun 5, 2016

EVNow A car is fully autonomous if it can navigate on its own in a large chaotic city - say Calcutta.

I bet the Google car can't do it today - heck, most of us can't do it.

Jun 5, 2016

S'toon I don't know about Calcutta, but if it's anything like Delhi, don't drive there.


Jun 5, 2016

ohmman Andrew is certainly taking the more cautious approach. I don't know that I'd say he's missing the point, though - he fully understands it and respects the power of deep nets. I think you mistook my comment about "focus" to mean the models only look at that. What I mean is that those features can be more appropriately weighted by the network.

Regarding the above quote, while I don't agree with his assessment, I also don't think it's an indefensible position. Two reasonable people could have a healthy disagreement about this, so it would be hard for me to lose respect for him over it. I find him to be a very generous and caring individual who also happens to be a great ML researcher and teacher.

Jun 5, 2016

EVNow I guess the best parts of Calcutta would be like New Delhi


Jun 5, 2016

EVNow There was an interview with the NHTSA director (IIRC) some time back on NPR. He basically said the department is waiting for someone in the industry to bring a car to them - they'd look at approving the cars on a case-by-case basis in the beginning. IOW, no detailed regulations/guidelines in the beginning.

Jun 6, 2016

gregincal Which is my point exactly. So the answer to the question of whether the Model 3 will have full autonomy IMO is definitely no.

It's confusing to have two different ratings.

Jun 6, 2016

DrivingTheFuture Looks like the 23.5% of us who voted yes to full autonomy are not alone in our prediction...

Elon Musk did everything but confirm that Tesla Model 3 will be fully autonomous [Video]

Tesla's Elon Musk hints Model 3 will be fully autonomous | ZDNet

To clarify, in my opinion it will be hardware capable at launch... but not necessarily ready to activate the feature...

Jun 6, 2016

gregincal But that means nothing. Autonomy is all about software. Software could easily take another 5 years or more. Witness "in the next few months" becoming over a year to get out the current autopilot software, which is about 1/1000th as difficult as full autonomy.

Jun 6, 2016

Booga Full autonomy isn't a big need on my part, nor is city driving. Just get better and better at highway driving and I'll be happy. I don't need it for short drives or construction zones, but would find it most helpful if it could continue to improve highway travel and decrease driver involvement. That said, if it is fully autonomous, that would certainly be a huge convenience.

Jun 6, 2016

Alketi As a software engineer, I have to disagree. While full autonomy might not fit your timeframe, having the hardware be capable of it means everything.

For one, all the algorithms can be tested (silently) across hundreds of thousands of vehicles. For two, incremental improvements can be constantly delivered. And lastly, when full autonomy software is ready (and approved by regulators) you won't need to buy another car to get it. A software upgrade is called a patch. A hardware upgrade is called a recall. You want the patch.
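As a rough illustration of what "tested (silently)" could look like, here is a minimal Python sketch of a shadow-mode comparison: the algorithm computes what it would have done, the driver stays in control, and only the disagreements are counted. This is an assumption about how such testing might be structured, not a description of Tesla's system; the function name `shadow_disagreement_rate` and the 2 degree tolerance are invented for the example.

```python
from typing import Iterable, Tuple


def shadow_disagreement_rate(
    samples: Iterable[Tuple[float, float]],
    tolerance_deg: float = 2.0,
) -> float:
    """Fraction of samples where the silent algorithm disagreed with the driver.

    Each sample is (driver_steering_deg, algorithm_steering_deg). The algorithm's
    output is only logged and compared; it never controls the car.
    """
    total = 0
    disagreements = 0
    for driver_deg, algorithm_deg in samples:
        total += 1
        if abs(driver_deg - algorithm_deg) > tolerance_deg:
            disagreements += 1
    return disagreements / total if total else 0.0


if __name__ == "__main__":
    log = [(0.0, 0.5), (10.0, 3.0), (-5.0, -4.0), (2.0, 9.0)]
    print(shadow_disagreement_rate(log))
```

A falling disagreement rate across a large fleet would be one kind of evidence that a new algorithm is ready to be switched on, which is why the hardware has to be in the cars first.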


Jun 6, 2016

1208 I just want to know what it is. It's annoying...


Jun 6, 2016

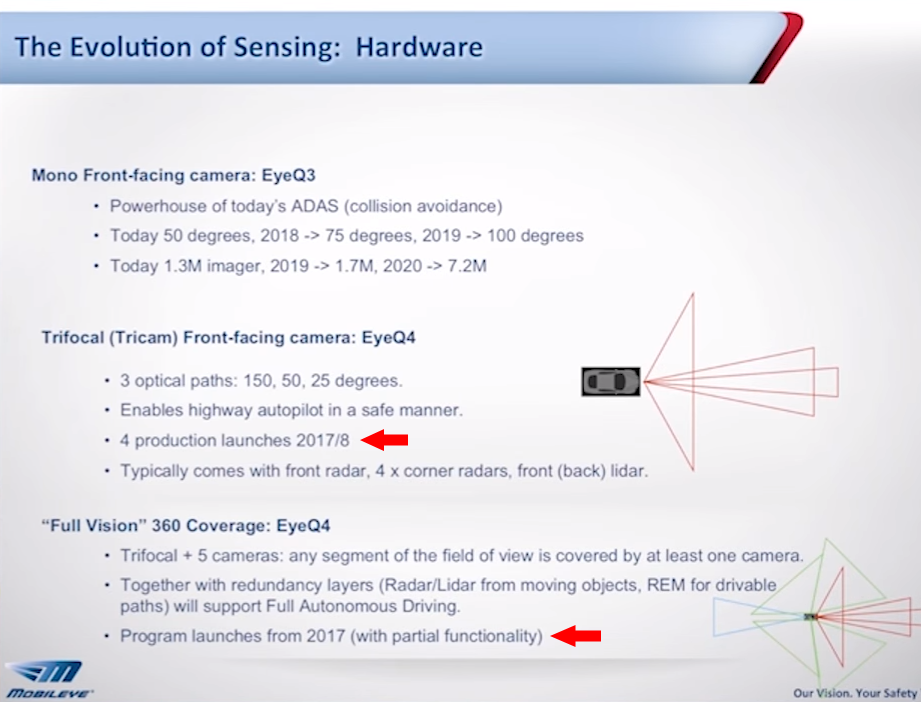

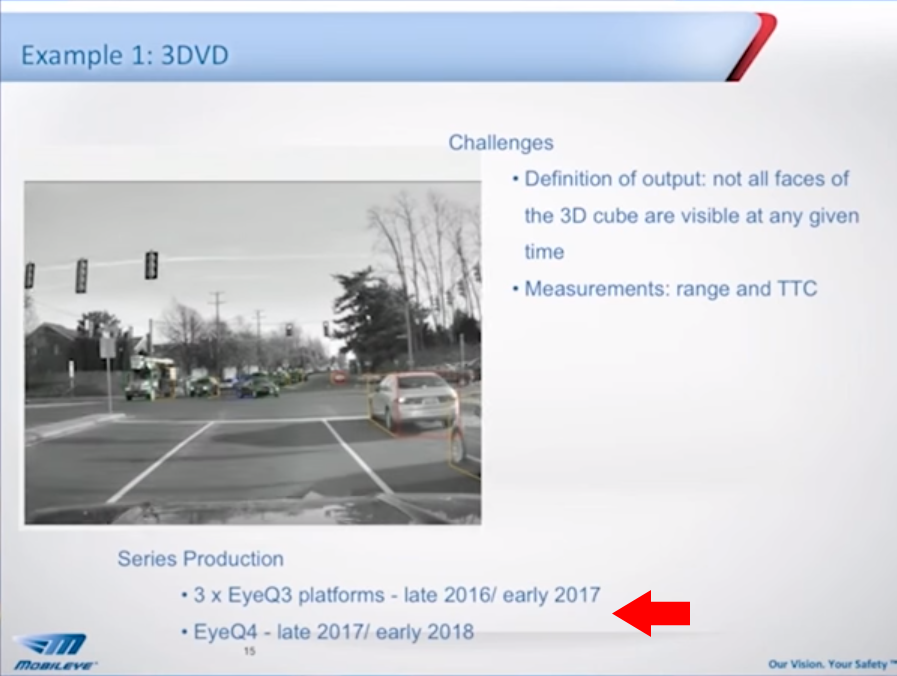

favo From Amnon Shashua's (MobilEye CTO) CES 2016 presentation on Jan. 7, 2016 (embedded at bottom of post), I think it's entirely possible that EyeQ4 will make it into Model 3 from the beginning. It looks to me like Elon's end of year announcement will be about AP 2.0 with new hardware and software. I'm guessing it will be the "Full Vision" hardware suite with multiple cameras, out on Model S/X first with a network of three EyeQ3 chips, then later next year with EyeQ4. With any luck, Model 3 will get the same sensor suite and EyeQ4 from the launch. It's also possible Model 3 will launch with the triple EyeQ3 setup, if EyeQ4 isn't quite ready in enough volume. Time will tell.

Discussed at about 21:20. Note the four launches for Trifocal EyeQ4 in 2017 and 2018 and Full Vision EyeQ4 in 2017 (probably late 2017, see below) with partial functionality. Could include revamped Model S/X as well as Model 3 afterwards. The comment about partial functionality lines up with Tesla's way of rolling out the hardware sensors first and then doing OTA software updates with new features that use that hardware.

Discussed at about 26:30. Note mention of implementation on network of three EyeQ3 chips late this year (matching Elon's end of year announcement). Then EyeQ4 is slated for late 2017/early 2018, nicely matching with Model 3.

Full presentation:


Jun 6, 2016

Alketi Really nice post favo. On the bright side, it seems fairly clear that the Model 3 will at least ship with the upgraded sensor suite that's due to be announced end-of-year, which means (worst case) something close to full highway autonomy.

I wonder how much they'll push for the EyeQ4 for the Model 3, however, keeping in mind the incredible time-sensitivity of the massive launch ramp. If the EyeQ4 is planned for, but isn't ready or isn't shipping in the necessary quantities, then Tesla will have a production disaster on their hands.

For that reason, I suspect Tesla will be conservative with the Model 3, and choose the EyeQ3 platform. [I'd love to be wrong]

However, if they're feeling generous, they could still install the cameras and sensors necessary for Full Vision, only use the front cameras with EyeQ3, and then offer a "module upgrade" at a later date, swapping out the EyeQ3 hardware for EyeQ4 (which would make use of the additional cameras). They'd have to be feeling pretty generous to do the extra engineering work and eat the up-front sensor costs, but that's the sort of extra effort that causes your customers to become brand evangelists who make their own car commercials in their spare time.

Jun 6, 2016

JeffK I think that meant 3 platforms using EyeQ3 chips not 3 EyeQ3 chips. We know as of late 2015 there were "two programs" by one or more OEMs using 5 connected EyeQ3 chips.

Jun 6, 2016

gregincal Until you've written the software you can't be sure that the hardware is indeed sufficient. However, my main point is that if the software doesn't exist you cannot claim that the Model 3 has full autonomy. It's like saying you have a robot that can play chess because it can move the pieces, and now you just need to write the part where it knows how to play chess (but it's just software).

Jun 6, 2016

Alketi Tesla's not claiming the Model 3 has full autonomy. Nor would they make that claim for the S/X. "Hardware" means it can be upgraded later, to the extent the hardware supports. We'll likely find out the extent of the hardware capability later this year. But, hardware support most certainly does not mean "nothing".

![[?IMG]](https://lh3.googleusercontent.com/blogger_img_proxy/AEn0k_vdbET4ACDKwIrG6nKoUGOv7ZLlrlqv7UFowBOqXtQYQtrTeRqJHYwRyLrJnypJvl058Yc0k-ZNRQOGSX1RhLw4iA=s0-d)


Jun 6, 2016

Topher It's not clear to me why anyone would expect an auto-pilot to be making moral decisions. If there is some situation which can be determined with sufficient clarity that a human would make a certain moral decision, the auto-pilot can be programmed to do the same thing. The hard decisions are the ones for which there are a lot of unknowns, and I wouldn't classify those as moral decisions, for precisely that reason.

Thank you kindly.

Jun 6, 2016

JeffK Personally, if I had time to make a moral decision in an emergency situation, I'd have time to brake. It's more than likely I'm going to hit what I don't see until it's too late. I don't think the majority of people are making moral decisions when they have accidents.

Jun 6, 2016

DrivingTheFuture VERY informative. I think the part you marked with the second red arrow is the biggest clue yet... "program launches from 2017 with partial functionality"... hmmm, what manufacturer has done this in the past other than Tesla? Seriously, is anyone else doing OTA updates that significantly upgrade hardware functionality???

Jun 6, 2016

DrivingTheFuture Maybe Elon would give reservation holders the choice on whether or not they want to delay delivery another 6 mos and have full autonomous capability...

Jun 6, 2016

JeffK Make no mistake, the hardware needed is going to be ready in plenty of time. It's the DNN models which might need to be updated, so that's an OTA update. It's already well known exactly what hardware (sensor-wise) is necessary.

Jun 6, 2016

ohmman I'd like to respond in case this post is in response to the philosophical point I raised earlier. The AI philosophers like to discuss what's going to be happening years ahead of where we are now, so the idea is that the autonomous software knows and sees a lot more than we could ever see. It can see and evaluate the number of passengers in each car, the velocity of those vehicles, the exact trajectory, the probability of them steering in certain paths based on movements that the autonomous software makes itself, etc. In a heavily simplified version, you can think of the way you can teach a computer to play chess. It can evaluate every outcome and use probabilistic modeling to determine the right thing to do, and it does it nearly instantaneously. People used to say "once a computer can play chess, we've reached full AI."

If we teach a model to do this same type of thing, it's not going to be by "programming" it in the traditional way. It'll be a model that learns from humans and improves based upon its own expanded visibility. At that point, we will be expecting it to make moral decisions well beyond what our very limited human minds can comprehend. And that's why it's in the field of philosophy at this point.

Jun 6, 2016

favo If you go to 28:00 in the presentation, he clearly says "there is one production which is coming sooner [than late 2017] which is running on three EyeQ3's..."

And he goes on to say that everything he's talking about is backed by actual production agreements with customers, not based on stuff in the lab or that is purely theoretical.

Jun 7, 2016

JeffK I'm citing an interview with the CEO saying there were two using 5 EyeQ3 chips in late 2015. I posted the video in one of these threads.

What the CTO is saying in that talk is that there's going to be a production vehicle using 3 EyeQ3 chips to do 3D vehicle detection. He's not saying it's the first ever 3 chip vehicle.

Jun 7, 2016

Topher Who said anything about programming in the traditional way? If it is copying what humans do, then it will be copying human morals as well (for good or ill). It isn't making new moral judgements. If it is not copying humans, we have more pressing problems.

Thank you kindly.

Jun 7, 2016

JeffK It's not copying humans for moral decision making... how could it, unless you have a whole bunch of instances where people chose between a puppy and a small child? I really don't think people are worried about moral decision making. If you can avoid the accident entirely, then why not do that instead?

Jun 7, 2016

Topher It's copying human behavior, how could it possibly do that and expressly remove the moral aspects? How does it know which actions are morally motivated and which aren't?

People are worrying, see further up in the thread for examples. It comes up every time autonomy is mentioned.

These philosophical moral questions are always couched in absolute terms. Avoiding the accident is never allowed to be one of the options. Don't blame me for that.

Thank you kindly.

Jun 7, 2016

gregincal OK, this is getting confused. Of course Tesla isn't claiming they have full autonomy, but the original poster claims it will be there at launch of the Model 3. That's what this whole thread is about. I'm just saying that believing that they will have hardware capable of eventually providing full autonomy but not the software is very different from what the OP of this thread is claiming.

(In other words, hardware support is only meaningless in terms of being able to claim that the Model 3 actually has full autonomy, not in terms of being able to get there.)

Edit: People probably feel I'm being pedantic in my posts, but full autonomy is a holy grail that is going to be hugely disruptive to the entire automotive industry, on a far greater scale than anything to do with electric cars. And yet people talk about it like it's going to just be another cool feature of the Model 3.

Jun 7, 2016

JeffK I'd argue that the vast majority of accidents have zero moral aspects. I'm sure we can think up several possible scenarios that do, but the majority in the real world don't.

Jun 7, 2016

DrivingTheFuture Actually you and I are of the same mind/opinion. Notice the bold from my initial post in this thread:


Jun 7, 2016

gregincal The part I disagreed with was "functional by launch time". Also, I'm not necessarily convinced about the hardware. Mobileye is touting the EyeQ5 as being their full autonomy solution, due out a couple years after the Model 3. That doesn't mean they can't still make a major announcement of improvements to AutoPilot.

Jun 7, 2016

favo Yes, I was responding to your statement "I think that meant 3 platforms using EyeQ3 chips not 3 EyeQ3 chips."

Jun 7, 2016

DrivingTheFuture You know what, you're right. I see that I kinda contradicted myself there. I don't think it will be fully functional at launch anymore... but I'm sure hoping it is. I know it's a long shot.

Jun 7, 2016

Omer WOW!!! Awesome find favo!

That speech should end all speculation on the Tesla message boards. Mobileye just described in detail Tesla's entire autonomous roadmap.

Essentially all the hardware for autonomy is understood at this point and widely available except the processor. The cameras, radars and/or lidar are not expected to change or even improve much. The cameras will retain 1.3mp for low light sensitivity (will upgrade in 2020). The only reason the full hardware suite hasn't been deployed is because it's pointless without the processor. They will need EyeQ4, which won't be available until the end of 2017. Except Tesla will use 3 EyeQ3's starting at the end of this year to accomplish the same processing power.

So all Teslas starting the end of this year will come with the entire autonomous hardware suite capable of full autonomy. Whenever EyeQ4 is ready, Tesla will simply switch from 3 EyeQ3's to a single EyeQ4 and leave the rest of the sensors the same. This makes a lot of sense in relation to Model 3 because Tesla does not want any possible delays, and this way they are not dependent on production of EyeQ4, which would be cutting it way too close for Model 3.

In 2019 they upgrade the cameras from 1.3mp to 1.7mp and in 2020 to 7mp. Those upgrades would just improve sensing but would not prevent cars produced from the end of this year forward from utilizing the full features of complete autonomy.

Software is another story entirely. The speech makes a strong case for the software being much closer than many expect. I don't think they will release full software until 2019 due to regulations. However, having full hardware starting the end of this year will give them all the necessary data to share with regulators.

Jun 8, 2016

zenmaster Where did you read Tesla is doing 3 networked EyeQ3? Should be 5.

The EyeQ4 chip was sampling last year and could possibly make it to volume production in time for Model 3 production.

Jun 8, 2016

JeffK The video he was referring to mentioned using three EyeQ3 chips for 3D vehicle detection (3D bounding boxes). That's not the total number of chips in the vehicle, just how many are needed to do a single feature an EyeQ4 chip could do by itself.

Jun 8, 2016

zenmaster The point is, where did the notion that Tesla will be going from 3 EyeQ3 chips to 5 originate?

Jun 8, 2016

JeffK The notion that Tesla has already been using 5 EyeQ3 chips came from the MobilEye CEO in late 2015.

Jun 8, 2016

melindav I just hope it will be able to learn where on the road I swerve around various potholes and ill-fitted manhole covers. I can think of at least a dozen locations on my daily drive where I move to one side or the other of a lane to avoid a bad bump in the road.

Personally, I think you are giving us humans too much credit when it comes to morals. Look around nowadays. We are not exactly the most moralistic crowd, especially when it comes to driving. Can't tell you when the last week went by that I didn't see a local hit and run story. Not to mention just common manners toward others.

And as others have said, in an emergency situation, the thought process involved if you are going to hit a school bus or drive over a cliff has less to do with morals than self preservation.

Jun 8, 2016

electracity Great presentation. One tricky part to figuring out what is going on is that Tesla's autonomous driving staff may be as large as Mobileye's.

Also, a similar Google presentation would probably be equally persuasive. The simple truth is that Google had nothing Tesla could implement two years ago.

Whatever the core technology, the edge-case problems in implementing even Level 3 are very significant.

Jun 8, 2016

zenmaster No. The next gen systems will be using 5, not 3. These have yet to be deployed in production. This is getting silly.

Jun 8, 2016

favo In another informative presentation, Shashua discusses detecting bumps, potholes, and debris. He says they can detect road contours at the millimeter level, and later he talks about detecting debris that is 10 cm high, 50+ meters away (like a tire). Starts at about 10:59 in the video.

10 cm high tire detection:

4 cm bump detection:
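To get a feel for how small a 10 cm object at 50 m is from the camera's point of view, here is a quick back-of-the-envelope sketch in Python. The angle calculation is plain trigonometry and comes out to roughly a tenth of a degree. The pixel estimate is only illustrative: the 40 degree vertical field of view and 960 vertical pixels are assumed numbers, not MobilEye or Tesla specifications, and the helper names are made up.

```python
import math


def angular_height_deg(object_height_m: float, distance_m: float) -> float:
    """Angle subtended by an object of the given height at the given distance."""
    return math.degrees(math.atan2(object_height_m, distance_m))


def pixels_spanned(
    object_height_m: float,
    distance_m: float,
    vertical_fov_deg: float,
    vertical_pixels: int,
) -> float:
    """Rough pixel height, assuming pixels are spread evenly over the field of view.

    vertical_fov_deg and vertical_pixels are hypothetical camera parameters.
    """
    angle = angular_height_deg(object_height_m, distance_m)
    return angle / vertical_fov_deg * vertical_pixels


if __name__ == "__main__":
    print(angular_height_deg(0.10, 50.0))
    # Assumed camera: 40 degree vertical FOV, 960 vertical pixels.
    print(pixels_spanned(0.10, 50.0, vertical_fov_deg=40.0, vertical_pixels=960))
```

With those assumed camera numbers the tire covers only a few pixels, which gives some sense of why this kind of detection is considered hard.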


Jun 8, 2016

Booga That is actually very cool - I'm glad you shared that, because it was on my mind. These cars could create a database of road conditions that local governments might be able to use to identify things that need to be fixed up.
